Stop Chat Control

The European Union is about to implement legislation that would mean all your communications are monitored. You probably haven't even heard of it.


Create safe internet spaces for children instead of making the internet unsafe for everyone.

This year, the European Commission will be working on a legislative proposal called Chat Control. The proposal aims to have all EU citizens’ communications monitored and scrutinized. This means that all of your phone calls, video calls, text messages, every single line that you write in all kinds of messaging apps, your e-mails, and chat conversations in video games and dating apps (yes, all of this) will be filtered in real time and potentially flagged for a more in-depth review. This also applies to images and videos saved in the cloud, which means the legislation affects essentially everything you do with your smartphone. In other words, your personal life will be fully exposed to government scrutiny. Most people have never even heard of this legislation, despite the fact that it will affect all European Union citizens. It’s about time to start discussing this proposal and make sure it gets withdrawn (at least for those of us who want to defend human rights and democratic processes within the EU).

The European Commission claims that the legislation is needed to detect and prevent child sexual abuse material (CSAM) and child grooming. The problem is that this legislative proposal won’t help children much. Instead, it will make the internet an unsafe space for everyone. Further on in this material, we’ll outline the huge risks threatening us if this proposal becomes reality. But first, we’ll explain how Chat Control is meant to work, and how it results in a complete violation of your privacy.

The end of encrypted communication: When you believe that you are having a private conversation with someone, there will always be a third person in the conversation.

How will the EU go about creating a massive surveillance system that monitors the communications of each and every EU citizen? Since this legislative proposal also covers encrypted messaging services, there are two options. Either the EU will demand a back door to all communication, which means that every conversation will have a third-party listener, or each message will have to be scanned on the phone or computer before it is encrypted and leaves the device (so-called client-side scanning). Both options mean an end to private conversations within the EU. That alone will have disastrous consequences, but more on that later. First, consider the huge number of communications that will need to be monitored and sorted.

Artificial Intelligence will flood law enforcement with photographs from family vacations and intimate text messages between 20-year-olds.

If this legislation becomes reality, the EU will require all companies offering digital services to comply with Chat Control. Every digital company will need to make sure that AI tools scan all of its users’ communications for CSAM or for sexual communication between adults and children. The companies will then either have to review the flagged material themselves or send it to a new EU center that would need to be built (we have many dystopian suggestions for its name, but we’ll save those for later).

To repeat: AI will scan every single bit of every citizen’s communications in search of this material. How well do you think that will go? European law enforcement authorities have tried searching for already known material, and even then, 80 to 90 percent of the hits were false (i.e., completely ordinary, legal images). Chat Control also requires the detection of “new material”, meaning that the AI tools will have to decide whether the material is illegal or simply intimate messages between partners, nude pictures exchanged between 20-year-olds, holiday pictures from the beach, or poolside photos from the family album where someone ended up with their swimming trunks on their head. This system will collect an endless amount of (completely legal) private images and conversations from the entire EU population. This is an unprecedented violation of privacy. And the question is: what will happen to all those images?

We have read stories about private security firms circulating material around the office that they should never have shared. We have heard Edward Snowden describe how NSA employees used to sit and look at pictures of naked women. Why should the employees who run this surveillance operation be expected to behave any better? And who are they, anyway? Why have they applied for these jobs, and how can we be sure they won’t disseminate this material, including the illegal images they will be hired to detect? But those aren’t even the biggest issues we’ll face with Chat Control. There’s more.

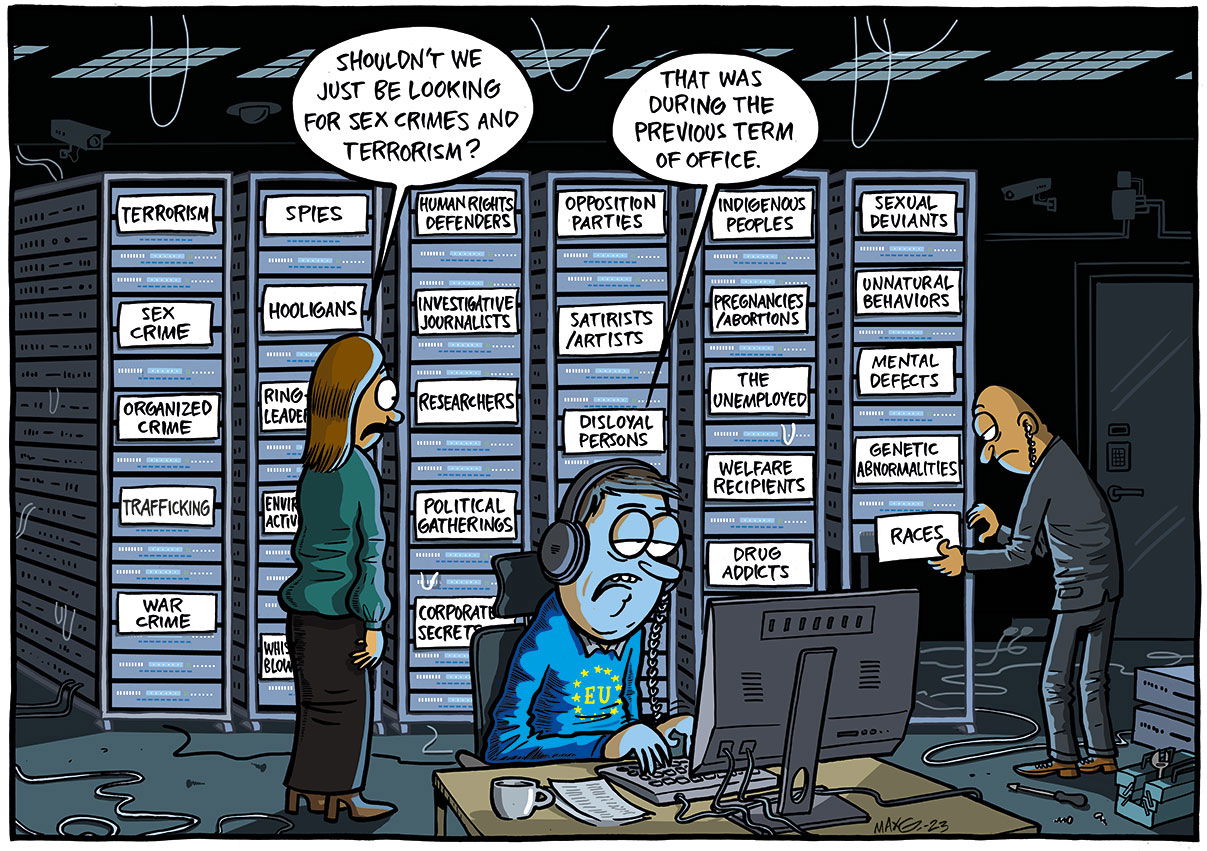

Total mass surveillance was once seen only in totalitarian states. What will this massive system search for in the future?

This system’s implementation will inherently mean a total violation of privacy. But there’s something else to worry about, namely, how this system could be used by the different governments within the EU. Once the system is put in place, encrypted messaging services will be a thing of the past, and all human communications will be subject to interception. Afterward, the system might be reconfigured at any point in time to search for other things. In 2022, five different EU governments were criticized for having monitored political opponents. What do you think governments will use this tool for? Think about the most authoritarian EU country you know of. Then think about this mass surveillance system. Will we, as citizens of the EU, even be told which “additional searches” our intelligence services and the people in power are carrying out? Or will we depend on whistleblowers (even though blowing the whistle will certainly become much harder without encrypted messaging services)?

We can compare this system of mass surveillance with the existing surveillance in the United States, where the authorities have a back door to social media companies and the big tech giants already perform these types of searches. What makes this EU proposal even worse, though, is that it also covers encrypted messaging services. In the United States, it is still possible to send secure messages through encrypted services. If Chat Control becomes reality, Europe will take the lead as the most technologically dystopian place in the Western world.

Chat Control would endanger everyone’s online security.

Since Chat Control would mean the end of encrypted messaging services, we could never be sure that our communications won’t leak. That alone is a problem for companies working on a new product they intend to patent. It is a problem for people who have digital contact with a psychologist, lawyer, doctor, or anyone else in a professional relationship where confidentiality is involved. All of these communications are at risk of leaking if the reviewer happens to be the wrong person (someone with ulterior financial motives, someone under another kind of pressure, someone who simply wants to share the material, or someone who finds it hard to keep quiet in front of colleagues and friends), or if the conversation is hacked. Data leaks will take on a whole new meaning with Chat Control.

Seriously: doesn’t the European Commission realize that an internet where all communication is at an elevated risk of being hacked or leaked is a Pandora’s box that shouldn’t be opened?

The people who truly have something to hide are the ones who will be affected the most. Among those are whistleblowers, anonymous sources, journalists working in authoritarian countries, political opponents in states where the opposition is persecuted, and people with secret identities hiding from violent relationships, not to mention children who need to reach out to an adult and be sure that their conversation won’t leak.

This legislative proposal violates human rights. Isn’t that enough to toss it on the garbage heap?

This is basically about what kind of European Union we want. The proposal violates the European Convention on Human Rights, the EU Charter of Fundamental Rights, and the UN Universal Declaration of Human Rights, which states that everyone has the right to privacy and private correspondence. The European Data Protection Supervisor has strongly criticized this proposal, and the UN High Commissioner for Human Rights has issued a warning about it. But the European Commission already knows this and even mentions it in the actual proposal: “The proposal affect[s] the fundamental rights to respect for privacy, to protection of personal data and to freedom of expression and information. Whilst of great importance, none of these rights is absolute.”

Looking at how mass surveillance has been carried out historically, we see plenty of examples of its purpose shifting, sometimes drastically. Nobody will ever be sure whether somebody is listening, and when people feel monitored, they start self-censoring. At first, we will think twice about writing anything intimate to our partners and reconsider which photos we take. Then, as we sense that the system might be checking for other things as well, we will adjust accordingly. Eventually, self-censorship will limit free speech, even in private settings. The change won’t be sudden; it will sneak up on us. Installing these kinds of back doors will mark the point at which our freedom begins to slip away. Depriving people of private conversations undermines a cornerstone of democratic society.

Chat Control won’t even help children.

In this text, we won’t discuss how poorly written this legislative proposal is, how difficult it will be to implement, or what it would mean to the economy, technical development, and business environment within the European Union. The proposal is so intrusive and offensive that it cannot be interpreted as anything other than using children as an excuse for total mass surveillance. But if we disregard all that and focus on a few lines about its alleged purpose, it is obvious that Chat Control won’t even protect children.

Let’s start with false positives. Flagging legal material will flood the authorities with work, taking resources away from tracking down criminals. Law enforcement doesn’t have time to follow up on all ongoing cases even now; they are piling up, waiting their turn. Implementing Chat Control would cost enormous sums just to keep the bureaucracy, software, and maintenance up and running, not to mention all the employees who would need to follow up on everything that is reported. Imagine if that money could be spent on tracking down criminals instead.

Chat Control wouldn’t even catch the criminals, since they always find other means. They won’t use commercial channels they know are being intercepted. They will encrypt their messages themselves (it requires a lot of work, but it can be done if the motivation is there) or start using illegal encrypted services. They will share links that slip past the filters and use ZIP archives. If the law and technology adjust, so will they.

Resources need to be spent on safe spaces for children so that the police can focus their time and resources on tracking down criminals.

There is no easy solution to combatting CSAM and child grooming, but one thing is certain: implementing Chat Control won’t solve the problem. We have no ready-made solution, but we would like to help guide the discussion in a different direction.

Before we discuss what we see as a way forward, it is worth pointing out that Chat Control would also bar children from using social media. That seems to be the European Commission’s solution to child grooming, and we think it clearly shows just how detached from reality the Chat Control legislation is. The proposal simply requires all app stores to impose secure age verification controls (which makes it impossible to use these services anonymously) and to block children from using services where they might have contact with adults (which means all apps). Even though this might have some positive effects, such as children spending less time on social media, we still believe there is a better way to tackle online child grooming.

We suggest that, instead of Chat Control, we investigate and work toward a technical solution that makes it easier for (some) social media platforms to become safe for children to use. For instance, we suggest requiring reliable identification, prohibiting conversations between adults and minors unless approved by an identified parent, and imposing other suitable requirements for being certified as a “platform safe for children”.

There are certainly social media platforms today that would be interested in obtaining such a certification. Big actors could then share the relevant technology with smaller platforms, meaning that such a tool could be adopted widely without smaller actors having to spend too much money or resources on it.

This wouldn’t include all platforms. Moving in this direction would mean that social media platforms that want to contribute to safe spaces for children can do so. At the same time, people who actually have something to hide and must remain anonymous for their own safety can use other messaging services.

Building an infrastructure like this would require a lot of work. There are several questions that need to be investigated: What will the identification process look like? How do you prevent older siblings from helping younger children with false identification? How can you be sure that criminals won’t use their children’s identities? A technical solution such as this one might have to be combined with other tools that make it easier for parents to keep track of their children. There are several aspects to consider here, but we hope that with the right kind of expertise, this is an area where good work could be done. Creating safe spaces for children won’t be accomplished in the blink of an eye. However, it would require considerably less work than the colossal apparatus that Chat Control would demand.

It’s true that safer spaces for children on the internet would not stop the proliferation of already-existing material. But neither would Chat Control. Law enforcement should focus on the existing problem instead of scrolling through the entire European Union’s family albums.

Mullvad is a Swedish VPN company, and our business isn’t directly affected by a possible Chat Control regulation. Nevertheless, we strongly oppose this legislative proposal because we care about human rights and are passionate about personal privacy. Our society will perish without the right to private conversations.

We urge all politicians in Europe to focus their resources on tracking down criminals instead of monitoring entire populations.